Catch A Wave

What is sound, anyway?

With summer fast approaching, this seems like an appropriate time to take a short break from exploring the many facets of music and focus instead on a more basic topic: sound itself.

So what is sound, anyway? Some people are surprised to learn that it’s actually two things — a physical phenomenon and a perceptual one, as the Oxford dictionary definition states:

Sound (noun): Vibrations that travel through the air or another medium and can be heard when they reach a person’s or animal’s ear.

The physical component is the vibration, and the perceptual component is how we hear it. The former is what we’ll be discussing here, and it is, of course, objective: when a vibration occurs, it occurs, no ifs, ands or buts. The latter, on the other hand, is completely subjective. For example, if your parents hear a sound as loud and jarring, you may well perceive that same sound as being of only moderate volume and quite pleasing. This has been the basis of generational wars since time immemorial (I can distinctly remember my mother sniffily calling The Beatles’ music “noise,” a pronouncement as misguided as her contention that they would “never last”), and I talk more about that in this posting.

Musical Sound

Every sound begins when a physical object is moved or otherwise disturbed from its resting state. In the case of musical sounds, that object might be a pair of human vocal cords or a reed, set vibrating by a column of air rushing past it, or it might be a drumhead or a string being struck or plucked.

In response, the molecules of the surrounding medium (usually air, but sometimes water) are pushed out of their resting positions. They in turn push against their neighbors, and the disturbance passes outward as the molecules move back and forth in a fairly regular pattern. That traveling vibration is a sound wave. You can get a good visual sense of this by dropping a pebble into a pond: ripples fan out from the point of the disturbance, spreading far and wide until they run out of energy. (Strictly speaking, pond ripples are surface waves rather than sound waves, but the principle is the same.)
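To put rough numbers on that back-and-forth motion: a wave’s wavelength (the distance between successive crests) equals the speed at which it travels through the medium divided by its frequency. The Python sketch below is only an illustration; the helper names are hypothetical, and it assumes approximate textbook speeds of sound of about 343 meters per second in air at 20 °C and about 1,480 meters per second in water, both of which shift with temperature and other conditions.

```python
# Approximate speeds of sound in meters per second. These are assumed
# textbook values; real speeds vary with temperature, pressure and salinity.
SPEED_OF_SOUND = {"air": 343.0, "water": 1480.0}


def wavelength(frequency_hz: float, medium: str = "air") -> float:
    """Return the wavelength in meters of a sound wave: speed / frequency."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return SPEED_OF_SOUND[medium] / frequency_hz


if __name__ == "__main__":
    # Concert A (440 Hz) traveling through air and through water
    for medium in ("air", "water"):
        print(f"440 Hz in {medium}: {wavelength(440.0, medium):.3f} m")
```

Because sound travels roughly four times faster in water than in air, the same pitch stretches out into a much longer wave there.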

Of course, this is a highly simplified explanation of what’s actually going on. For one thing, most sounds consist of a series of interlocked vibrations called overtones (and, less commonly, undertones); these are what give each sound its distinct tonal character, or timbre. In addition, musical sounds are characterized by an easily detectable predominant frequency (called the fundamental frequency), which gives them a distinctive pitch, and their overtones and undertones tend to be whole-number multiples (or divisions) of that fundamental. I talked about this at some length in my previous blog posting The Numbers Game.
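Here is a minimal Python sketch of the idea that a musical tone is a fundamental plus overtones at whole-number multiples of it. It models overtones only (undertones are left out), and the function names and amplitude values are made up for illustration. The point is that both example tones share the same 110 Hz pitch, while their different overtone “recipes” would give them different timbres.

```python
import math


def harmonic_frequencies(fundamental_hz: float, count: int) -> list[float]:
    """Return the first `count` harmonics: whole-number multiples of the fundamental."""
    return [n * fundamental_hz for n in range(1, count + 1)]


def synthesize(fundamental_hz: float, amplitudes: list[float],
               sample_rate: int = 44_100, duration_s: float = 0.01) -> list[float]:
    """Sum one sine wave per harmonic, weighted by `amplitudes` (index 0 = fundamental).

    The fundamental sets the pitch; the relative overtone amplitudes shape the timbre.
    """
    freqs = harmonic_frequencies(fundamental_hz, len(amplitudes))
    n_samples = round(sample_rate * duration_s)
    return [
        sum(amp * math.sin(2 * math.pi * f * (i / sample_rate))
            for amp, f in zip(amplitudes, freqs))
        for i in range(n_samples)
    ]


if __name__ == "__main__":
    print(harmonic_frequencies(110.0, 5))  # [110.0, 220.0, 330.0, 440.0, 550.0]
    # Same pitch, two hypothetical overtone "recipes" (illustrative values only)
    bright = synthesize(110.0, [1.0, 0.8, 0.6, 0.4])
    mellow = synthesize(110.0, [1.0, 0.2, 0.05])
    print(len(bright), len(mellow))  # 441 441
```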

Recorded Sound

Until the late 19th century, you had to be physically within earshot of a sound in order to hear it. But thanks to the advent of recorded sound in the late 1800s (pioneered by Thomas Edison, along with several other less famous innovators), millions of people could enjoy a concert, or at least a scratchy rendition of it, rather than just the few hundred crowded into the theater or auditorium where it was performed.

As the delivery medium for recorded sound evolved from the phonograph record to magnetic tape to digital files, the process of getting that initial vibration from its source to its final destination (our ears) became increasingly convoluted. Devices such as the recording lathe, the microphone, the loudspeaker and tape recording/playback heads had to be invented in order to convert sound energy into electrical and magnetic energy, and then back again.

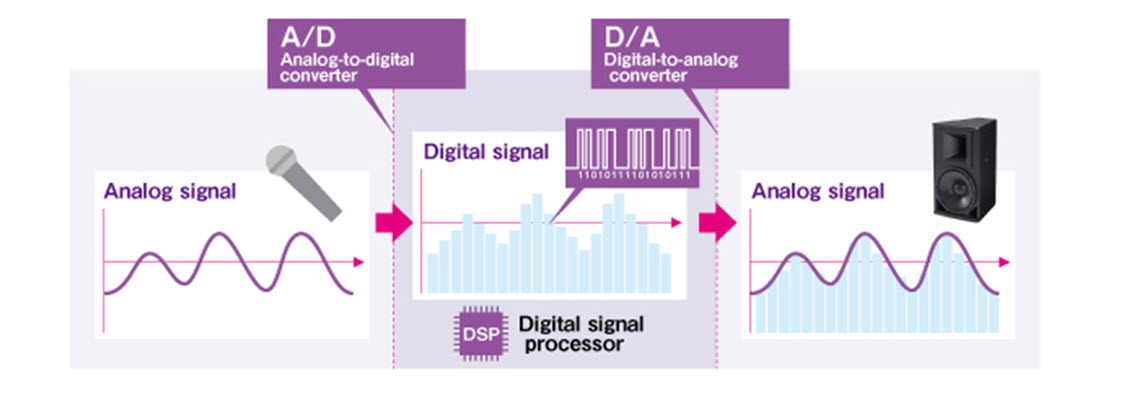

Things got even more complicated with the advent of digital recording in the 1970s, which remains the delivery medium of choice to this very day. As shown in the illustration below, a microphone first converts the original movement of air into an equivalent (i.e., analog) electrical signal. That signal is sent to an electronic component called an analog-to-digital converter (A/D for short), which measures it many thousands of times per second and produces a corresponding stream of ones and zeroes (binary digits). These can be processed further before being stored on a hard drive, flash drive or other computer medium. On playback (following any additional digital signal processing [DSP] you want to apply), the stream of digits is fed to a digital-to-analog converter (D/A for short), which converts it back into (you guessed it) an analog electrical signal. Finally, that signal is routed to a loudspeaker, which turns it back into physical vibrations of air that we perceive as sound.

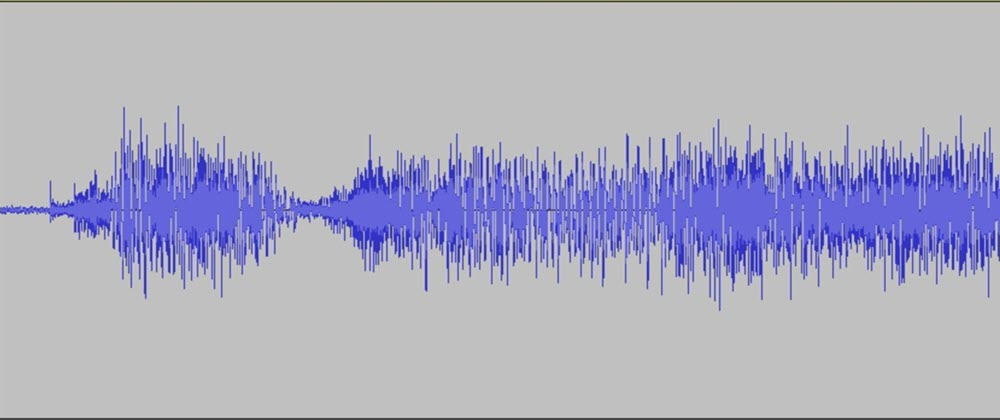
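The A/D and D/A steps can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not how any real converter is built: the “analog” signal is just a mathematical function of time, the sample rate (44,100 samples per second) and bit depth (16 bits) are the CD-audio values chosen here as examples, and the D/A step simply maps each number back to a level, leaving out the smoothing filter a real converter applies. The function names are hypothetical.

```python
import math


def adc(signal, sample_rate: int = 44_100, duration_s: float = 0.001,
        bits: int = 16) -> list[int]:
    """Sample `signal` (a function of time returning values in [-1.0, 1.0])
    at regular intervals and round each sample to a signed integer code."""
    max_code = 2 ** (bits - 1) - 1              # 32767 for 16-bit audio
    n_samples = round(sample_rate * duration_s)
    codes = []
    for i in range(n_samples):
        value = max(-1.0, min(1.0, signal(i / sample_rate)))  # clip to full scale
        codes.append(round(value * max_code))   # quantize to the nearest level
    return codes


def dac(codes: list[int], bits: int = 16) -> list[float]:
    """Map integer codes back to signal levels in [-1.0, 1.0].
    (A real D/A converter would also smooth the result with a filter.)"""
    max_code = 2 ** (bits - 1) - 1
    return [code / max_code for code in codes]


def tone(t: float) -> float:
    """Hypothetical stand-in for the microphone's output: a 440 Hz sine at half volume."""
    return 0.5 * math.sin(2 * math.pi * 440.0 * t)


if __name__ == "__main__":
    codes = adc(tone)          # the "ones and zeroes", shown as integers
    restored = dac(codes)      # back to signal levels
    worst = max(abs(r - tone(i / 44_100)) for i, r in enumerate(restored))
    print(len(codes), "samples; worst rounding error:", worst)
```

Rounding to the nearest code is where precision is lost: each restored sample can be off by at most half a quantization step, which at 16 bits is tiny. That is one reason the end result can be so close to the original.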

Whew! It’s amazing that what we end up hearing is even vaguely similar to the original sound … but it can actually be remarkably close. And one of the cool byproducts of digital recording is that it allows us not only to hear sound with our ears but also to see it with our eyes. For example, here’s a waveform of the Beach Boys singing “Catch a Wave” (not coincidentally, the title of one of their early hits):

Can you make out the three syllables? (“Hard” consonants like the “k” and “tch” sounds in “catch” produce sharp bursts of energy that show up as denser stretches of the waveform, so you should find the first one easily, but “a” and “wave” kind of flow into one another, so they’re a bit trickier.) This kind of visual feedback makes it easy to edit and “comp” performances together, something that was difficult to do on magnetic tape (since it required physically cutting the tape with a razor blade or scissors) and completely impossible when recording direct to disc.
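One simple way a program can find the dense, loud stretches that the eye picks out in a waveform is to measure the root-mean-square (RMS) level of short, consecutive frames of samples. The Python sketch below is a hypothetical illustration, not how any particular audio editor draws its display; the function names, frame size and threshold are arbitrary choices, and the test signal is synthetic rather than the actual Beach Boys recording.

```python
import math


def rms_envelope(samples: list[float], frame_size: int) -> list[float]:
    """Split `samples` into consecutive frames and return the RMS level of each.

    Loud or dense stretches of the waveform (such as the burst of a hard
    consonant) show up as peaks in this envelope.
    """
    if frame_size <= 0:
        raise ValueError("frame_size must be positive")
    envelope = []
    for start in range(0, len(samples), frame_size):
        frame = samples[start:start + frame_size]   # last frame may be shorter
        envelope.append(math.sqrt(sum(s * s for s in frame) / len(frame)))
    return envelope


def loud_frames(envelope: list[float], threshold: float) -> list[int]:
    """Return the indices of frames whose RMS level exceeds `threshold`."""
    return [i for i, level in enumerate(envelope) if level > threshold]


if __name__ == "__main__":
    quiet = [0.01] * 400                  # a near-silent stretch
    burst = [0.8, -0.8] * 100             # a short, loud, dense burst
    signal = quiet + burst + quiet
    env = rms_envelope(signal, frame_size=100)
    print(loud_frames(env, threshold=0.1))  # [4, 5]
```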

So if a tree falls in a forest and no one is around to hear it, does it make a sound? Well, it definitely sets the air vibrating (which it can do only because a forest has air around it; in the vacuum of outer space, there would be no medium to carry those vibrations), but whether it actually makes a sound is open to debate, and that’s something I talk more about in this posting.